DeepWiki provides real-time updated project documentation with more comprehensive and accurate content, suitable for quickly understanding the latest project information.

GitHub Access: https://github.com/apache/hugegraph

HugeGraph-Server is the core part of the HugeGraph project. It contains submodules such as graph-core, backend, and API.

The Core module is an implementation of the TinkerPop interface. The Backend module saves the graph data to the data store; for version 1.7.0+, the supported backends are RocksDB (standalone default), HStore (distributed), HBase, and Memory. The API module provides an HTTP server that converts the client's HTTP requests into calls to the Core module.

⚠️ Important Change: Starting from version 1.7.0, legacy backends such as MySQL, PostgreSQL, Cassandra, and ScyllaDB have been removed. If you need to use these backends, please use version 1.5.x or earlier.

Two spellings appear in this document, HugeGraph-Server and HugeGraphServer (other modules are named similarly). Their meanings differ only slightly:

HugeGraph-Server refers to the code of the server-related components, and HugeGraphServer refers to the service process.

You need Java 11 to run HugeGraph-Server (versions before 1.5.0 are compatible with Java 8, but this is not recommended), and you must configure it yourself.

Be sure to run the java -version command to check the JDK version before reading on.

Note: Using Java 8 loses some security guarantees; we recommend Java 11 in production.
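The version check can also be scripted. The following is a minimal Python sketch, not part of HugeGraph: the helper names `java_major_version` and `installed_java_major` are hypothetical, and the parsing assumes the usual `java -version` banner, where Java 8 and earlier report themselves as `1.x` and later releases put the major number first.

```python
import re
import subprocess


def java_major_version(banner: str) -> int:
    """Extract the major Java version from `java -version` output."""
    match = re.search(r'version "(\d+)(?:\.(\d+))?', banner)
    if match is None:
        raise ValueError("no version string found in banner")
    first, second = int(match.group(1)), match.group(2)
    # Java 8 and earlier use the legacy scheme 1.8, 1.7, ...
    if first == 1 and second is not None:
        return int(second)
    return first


def installed_java_major() -> int:
    """Run `java -version` and parse its banner (printed to stderr)."""
    result = subprocess.run(["java", "-version"], capture_output=True, text=True)
    return java_major_version(result.stderr + result.stdout)
```

A deployment script could call `installed_java_major()` and stop early when the result is below 11.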

There are four ways to deploy HugeGraph-Server components:

Note: If the server is exposed to the public network, you must enable Auth authentication to keep it secure (this also applies to legacy versions).

You can refer to the Docker deployment guide.

We can use docker run -itd --name=server -p 8080:8080 -e PASSWORD=xxx hugegraph/hugegraph:1.7.0 to quickly start a HugeGraph Server with a built-in RocksDB backend.

Optional:

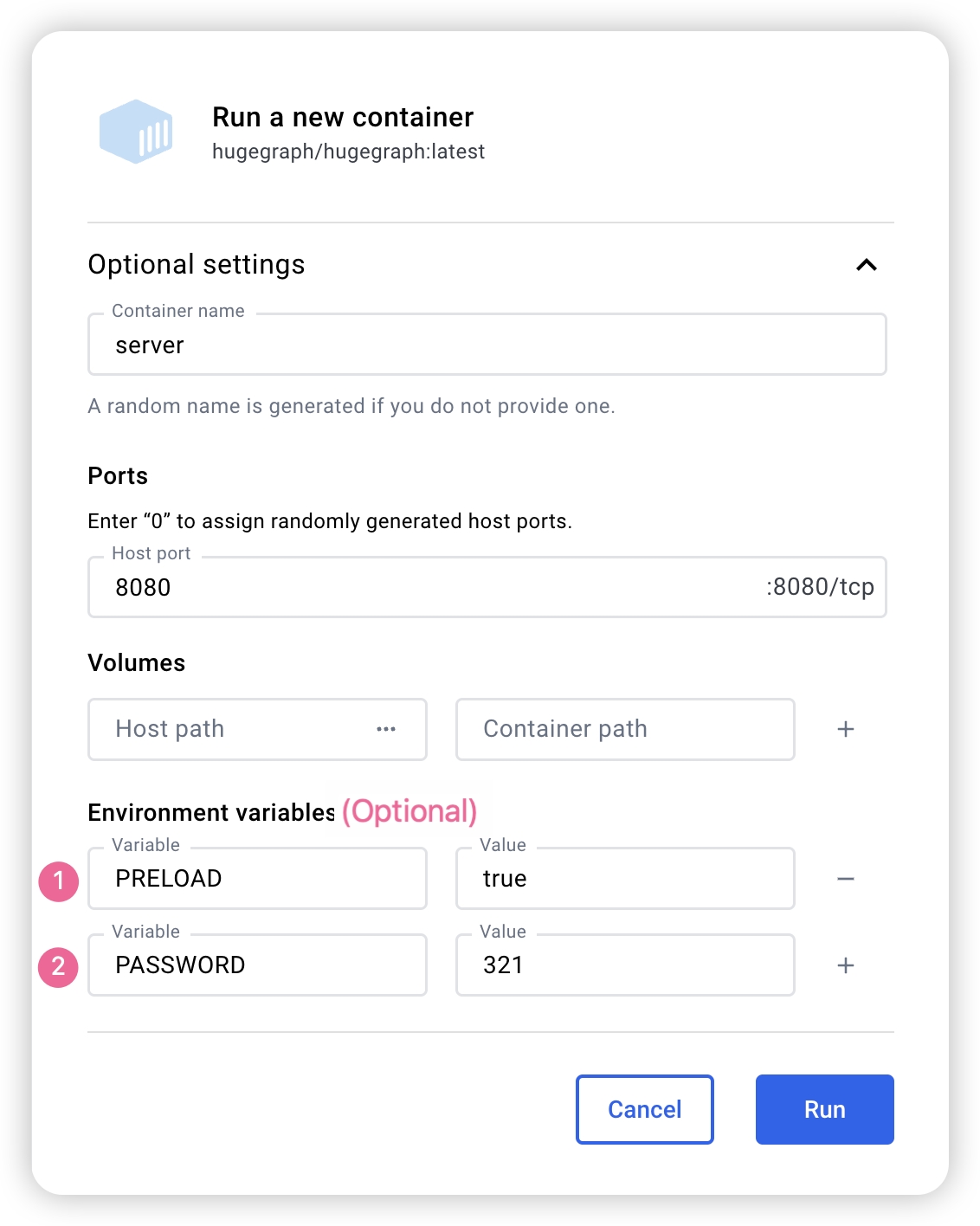

docker exec -it graph bash to enter the container to do some operations.docker run -itd --name=graph -p 8080:8080 -e PRELOAD="true" hugegraph/hugegraph:1.7.0 to start with a built-in example graph. We can use RESTful API to verify the result. The detailed step can refer to 5.1.8-e PASSWORD=xxx to enable auth mode and set the password for admin. You can find more details from Config AuthenticationIf you use docker desktop, you can set the option like:

Also, if we want to manage other HugeGraph-related instances in one file, we can deploy with docker-compose, using the command docker-compose up -d (you can configure only the server). Here is an example docker-compose.yml:

version: '3'
services:
  server:
    image: hugegraph/hugegraph:1.7.0
    container_name: server
    environment:
      - PASSWORD=xxx
      # PASSWORD is an option to enable auth mode with the password you set.
      # - PRELOAD=true
      # PRELOAD is an option to preload a built-in sample graph when initializing.
    ports:
      - 8080:8080

Note:

The Docker image of HugeGraph is a convenience release for getting started quickly, not an official distribution artifact. You can find more details in the ASF Release Distribution Policy.

We recommend using a release tag (like 1.7.0/1.x.0) for the stable version. Use the latest tag to experience the newest functions in development.

You could download the binary tarball from the download page of the ASF site like this:

# use the latest version, here is 1.7.0 for example

wget https://downloads.apache.org/hugegraph/{version}/apache-hugegraph-incubating-{version}.tar.gz

tar zxf *hugegraph*.tar.gz

# (Optional) verify the integrity with SHA512 (recommended)

shasum -a 512 apache-hugegraph-incubating-{version}.tar.gz

curl https://downloads.apache.org/hugegraph/{version}/apache-hugegraph-incubating-{version}.tar.gz.sha512
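The two commands above print the local digest and the published one, leaving the comparison to the reader. Below is a minimal Python sketch of that comparison. The function names are hypothetical, and it assumes the published .sha512 file has the common `<hex digest>  <file name>` layout; files in another layout would need different parsing.

```python
import hashlib
from typing import Iterable, Iterator


def file_chunks(path: str, chunk_size: int = 1 << 20) -> Iterator[bytes]:
    """Yield the file content in chunks so large tarballs are not loaded at once."""
    with open(path, "rb") as handle:
        while True:
            chunk = handle.read(chunk_size)
            if not chunk:
                return
            yield chunk


def sha512_of_chunks(chunks: Iterable[bytes]) -> str:
    """Return the hex SHA-512 digest of the concatenated chunks."""
    digest = hashlib.sha512()
    for chunk in chunks:
        digest.update(chunk)
    return digest.hexdigest()


def checksum_matches(actual_hex: str, published_text: str) -> bool:
    """Compare a digest with the first token of the published checksum text."""
    tokens = published_text.split()
    return bool(tokens) and tokens[0].lower() == actual_hex.lower()
```

For a downloaded tarball, `checksum_matches(sha512_of_chunks(file_chunks(path)), published_text)` should be true, where `published_text` is the content fetched by the curl command.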

Please ensure that the wget/curl commands are installed before compiling the source code.

Download the HugeGraph source code in either of the following two ways (the same applies to the other HugeGraph repos/modules):

# Way 1: download the release package from the ASF site

wget https://downloads.apache.org/hugegraph/{version}/apache-hugegraph-incubating-{version}-src.tar.gz

tar zxf *hugegraph*.tar.gz

# (Optional) verify the integrity with SHA512 (recommended)

shasum -a 512 apache-hugegraph-incubating-{version}-src.tar.gz

curl https://downloads.apache.org/hugegraph/{version}/apache-hugegraph-incubating-{version}-src.tar.gz.sha512

# Way 2: clone the latest code with git (e.g. from GitHub)

git clone https://github.com/apache/hugegraph.git

Compile and generate tarball

cd *hugegraph

# (Optional) use the "-P stage" param if the build fails with the latest code (during the pre-release period)

mvn package -DskipTests -ntp

The execution log is as follows:

......

[INFO] Reactor Summary for hugegraph 1.5.0:

[INFO]

[INFO] hugegraph .......................................... SUCCESS [ 2.405 s]

[INFO] hugegraph-core ..................................... SUCCESS [ 13.405 s]

[INFO] hugegraph-api ...................................... SUCCESS [ 25.943 s]

[INFO] hugegraph-cassandra ................................ SUCCESS [ 54.270 s]

[INFO] hugegraph-scylladb ................................. SUCCESS [ 1.032 s]

[INFO] hugegraph-rocksdb .................................. SUCCESS [ 34.752 s]

[INFO] hugegraph-mysql .................................... SUCCESS [ 1.778 s]

[INFO] hugegraph-palo ..................................... SUCCESS [ 1.070 s]

[INFO] hugegraph-hbase .................................... SUCCESS [ 32.124 s]

[INFO] hugegraph-postgresql ............................... SUCCESS [ 1.823 s]

[INFO] hugegraph-dist ..................................... SUCCESS [ 17.426 s]

[INFO] hugegraph-example .................................. SUCCESS [ 1.941 s]

[INFO] hugegraph-test ..................................... SUCCESS [01:01 min]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

......

After successful execution, *hugegraph-*.tar.gz files will be generated in the hugegraph directory, which is the tarball generated by compilation.

HugeGraph-Tools provides a command-line tool for one-click deployment. With it, users can quickly download, decompress, configure, and start HugeGraphServer and HugeGraph-Hubble.

You need to download the HugeGraph-Toolchain tarball first.

# download toolchain binary package, it includes loader + tool + hubble

# please check the latest version (e.g. here is 1.7.0)

wget https://downloads.apache.org/hugegraph/1.7.0/apache-hugegraph-toolchain-incubating-1.7.0.tar.gz

tar zxf *hugegraph-*.tar.gz

# enter the tool's package

cd *hugegraph*/*tool*

Note: ${version} is the version number. For the latest version, refer to the Download Page, where you can also download the package directly.

The general entry script for HugeGraph-Tools is bin/hugegraph. Users can run its help command to view the usage; only the commands for one-click deployment are introduced here.

bin/hugegraph deploy -v {hugegraph-version} -p {install-path} [-u {download-path-prefix}]

{hugegraph-version} is the version of HugeGraphServer and HugeGraphStudio to deploy; see the conf/version-mapping.yaml file for version information. {install-path} specifies the installation directory of HugeGraphServer and HugeGraphStudio. {download-path-prefix} is optional and specifies the download address of the HugeGraphServer and HugeGraphStudio tarballs; the default download URL is used if it is not provided. For example, to start HugeGraph-Server and HugeGraphStudio version 0.6, write the above command as bin/hugegraph deploy -v 0.6 -p services.

If you need to quickly start HugeGraph just for testing, you only need to modify a few configuration items (see the next section). For a detailed introduction to configuration, please refer to the configuration document and the introduction to configuration items.

Startup is divided into “first startup” and “non-first startup”. The distinction exists because the backend database must be initialized before the first startup, and only then is the service started. When the service has been stopped manually, or needs to be restarted for other reasons, you can start it directly because the backend database is persistent.

When HugeGraphServer starts, it will connect to the backend storage and try to check the version number of the backend storage. If the backend is not initialized or the backend has been initialized but the version does not match (old version data), HugeGraphServer will fail to start and give an error message.

If you need to access HugeGraphServer externally, modify the restserver.url configuration item in rest-server.properties (default is http://127.0.0.1:8080), changing it to the machine name or IP address.
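Changing a single item such as restserver.url can be done by hand or with a small script. The sketch below is an illustration only: `set_property` is a hypothetical helper that handles simple `key=value` lines and leaves comments and other keys untouched. It does not cover every feature of the Java properties format (for example line continuations).

```python
def set_property(text: str, key: str, value: str) -> str:
    """Return properties text with `key` set to `value`.

    Existing assignments of `key` are rewritten in place; if the key is
    absent, a new line is appended at the end.
    """
    lines = text.splitlines()
    replaced = False
    for i, line in enumerate(lines):
        stripped = line.strip()
        if stripped.startswith("#") or "=" not in stripped:
            continue  # skip comments and lines that are not assignments
        name = stripped.split("=", 1)[0].strip()
        if name == key:
            lines[i] = f"{key}={value}"
            replaced = True
    if not replaced:
        lines.append(f"{key}={value}")
    return "\n".join(lines) + "\n"
```

For example, reading conf/rest-server.properties, passing its content through `set_property(content, "restserver.url", "http://0.0.0.0:8080")`, and writing the result back makes the server listen on all interfaces.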

Since the configuration (hugegraph.properties) and startup steps required by various backends are slightly different, the following will introduce the configuration and startup of each backend one by one.

Note: Configure Server Authentication before starting HugeGraphServer if you need Auth mode (especially for production or public network environments).

Distributed storage is a new feature introduced after HugeGraph 1.5.0, which implements distributed data storage and computation based on HugeGraph-PD and HugeGraph-Store components.

To use the distributed storage engine, you need to deploy HugeGraph-PD and HugeGraph-Store first. See HugeGraph-PD Quick Start and HugeGraph-Store Quick Start.

After ensuring that both PD and Store services are started, modify the hugegraph.properties configuration of HugeGraph-Server:

backend=hstore

serializer=binary

task.scheduler_type=distributed

# PD service address, multiple PD addresses are separated by commas, configure PD's RPC port

pd.peers=127.0.0.1:8686,127.0.0.1:8687,127.0.0.1:8688

# Simple example (with authentication)

gremlin.graph=org.apache.hugegraph.auth.HugeFactoryAuthProxy

# Specify storage backend hstore

backend=hstore

serializer=binary

store=hugegraph

# Specify the task scheduler (for versions 1.7.0 and earlier, hstore storage is required)

task.scheduler_type=distributed

# pd config

pd.peers=127.0.0.1:8686

Then enable PD discovery in rest-server.properties (required for every HugeGraph-Server node):

usePD=true

# notice: must have this conf in 1.7.0

pd.peers=127.0.0.1:8686,127.0.0.1:8687,127.0.0.1:8688

# If auth is needed

# auth.authenticator=org.apache.hugegraph.auth.StandardAuthenticator
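Because pd.peers is a comma-separated list of host:port pairs, a typo in one entry is easy to miss. The following Python sketch validates such a value before it is written to the configuration; `parse_peers` is a hypothetical helper, not a HugeGraph API.

```python
from typing import List, Tuple


def parse_peers(value: str) -> List[Tuple[str, int]]:
    """Split a pd.peers value into (host, port) pairs, rejecting malformed entries."""
    peers = []
    for item in value.split(","):
        item = item.strip()
        if not item:
            continue  # tolerate a trailing comma
        host, sep, port = item.rpartition(":")
        if not sep or not host or not port.isdigit():
            raise ValueError(f"expected host:port, got {item!r}")
        peers.append((host, int(port)))
    return peers
```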

If configuring multiple HugeGraph-Server nodes, you need to modify the rest-server.properties configuration file for each node, for example:

Node 1 (Master node):

usePD=true

restserver.url=http://127.0.0.1:8081

gremlinserver.url=http://127.0.0.1:8181

pd.peers=127.0.0.1:8686

rpc.server_host=127.0.0.1

rpc.server_port=8091

server.id=server-1

server.role=master

Node 2 (Worker node):

usePD=true

restserver.url=http://127.0.0.1:8082

gremlinserver.url=http://127.0.0.1:8182

pd.peers=127.0.0.1:8686

rpc.server_host=127.0.0.1

rpc.server_port=8092

server.id=server-2

server.role=worker
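The two node configurations above differ only in their ports, server.id, and server.role. As an illustration, the Python sketch below renders the same keys for node number `index`. The function name is hypothetical, and the port layout (8080 + index, 8180 + index, 8090 + index) simply mirrors the example values above; it is an assumption of this sketch, not a HugeGraph requirement.

```python
def rest_server_properties(index: int, role: str, host: str = "127.0.0.1",
                           pd_peers: str = "127.0.0.1:8686") -> str:
    """Render the per-node rest-server.properties keys for node `index` (1-based)."""
    if role not in ("master", "worker"):
        raise ValueError("role must be 'master' or 'worker'")
    settings = {
        "usePD": "true",
        "restserver.url": f"http://{host}:{8080 + index}",
        "gremlinserver.url": f"http://{host}:{8180 + index}",
        "pd.peers": pd_peers,
        "rpc.server_host": host,
        "rpc.server_port": str(8090 + index),
        "server.id": f"server-{index}",
        "server.role": role,
    }
    return "\n".join(f"{key}={value}" for key, value in settings.items()) + "\n"
```

Calling `rest_server_properties(1, "master")` and `rest_server_properties(2, "worker")` reproduces the two blocks shown above.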

Also, you need to modify the port configuration in gremlin-server.yaml for each node:

Node 1:

host: 127.0.0.1

port: 8181

Node 2:

host: 127.0.0.1

port: 8182

Initialize the database:

cd *hugegraph-${version}

bin/init-store.sh

Start the Server:

bin/start-hugegraph.sh

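The startup sequence for using the distributed storage engine is: start HugeGraph-PD, then HugeGraph-Store, and finally HugeGraph-Server (initializing the database before its first startup).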

Verify that the service is started properly:

curl http://localhost:8081/graphs

# Should return: {"graphs":["hugegraph"]}
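The same check can be automated. This is a minimal Python sketch using only the standard library; the helper names are hypothetical, and it assumes the server is reachable without authentication (with auth mode enabled, the request would also need credentials).

```python
import json
import urllib.request
from typing import List


def listed_graphs(body: str) -> List[str]:
    """Return the graph names from a /graphs response body."""
    return list(json.loads(body).get("graphs", []))


def server_has_graph(base_url: str, name: str, timeout: float = 5.0) -> bool:
    """Query {base_url}/graphs and report whether `name` is listed."""
    with urllib.request.urlopen(f"{base_url}/graphs", timeout=timeout) as resp:
        return name in listed_graphs(resp.read().decode("utf-8"))
```

For the node configured above, `server_has_graph("http://localhost:8081", "hugegraph")` is expected to return True once the server is up.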

The sequence to stop the services should be the reverse of the startup sequence:

bin/stop-hugegraph.sh

Update hugegraph.properties

backend=memory

serializer=text

The data of the Memory backend is stored in memory and cannot be persisted. It does not need to initialize the backend. This is the only backend that does not require initialization.

Start server

bin/start-hugegraph.sh

Starting HugeGraphServer...

Connecting to HugeGraphServer (http://127.0.0.1:8080/graphs)....OK

The prompted url is the same as the restserver.url configured in rest-server.properties

RocksDB is an embedded database that does not require manual installation and deployment. GCC version >= 4.3.0 (GLIBCXX_3.4.10) is required; if your version is older, upgrade GCC in advance.

Update hugegraph.properties

backend=rocksdb

serializer=binary

rocksdb.data_path=.

rocksdb.wal_path=.

Initialize the database (required on the first startup, or when a new configuration has been manually added under ‘conf/graphs/’)

cd *hugegraph-${version}

bin/init-store.sh

Start server

bin/start-hugegraph.sh

Starting HugeGraphServer...

Connecting to HugeGraphServer (http://127.0.0.1:8080/graphs)....OK

ToplingDB (Beta): As a high-performance alternative to RocksDB, please refer to the configuration guide: ToplingDB Quick Start

⚠️ Deprecated: This backend has been removed starting from HugeGraph 1.7.0. If you need to use it, please refer to version 1.5.x documentation.

Users need to install Cassandra themselves; version 3.0 or above is required. See the download link.

Update hugegraph.properties

backend=cassandra

serializer=cassandra

# cassandra backend config

cassandra.host=localhost

cassandra.port=9042

cassandra.username=

cassandra.password=

#cassandra.connect_timeout=5

#cassandra.read_timeout=20

#cassandra.keyspace.strategy=SimpleStrategy

#cassandra.keyspace.replication=3

Initialize the database (required on the first startup, or when a new configuration has been manually added under ‘conf/graphs/’)

cd *hugegraph-${version}

bin/init-store.sh

Initing HugeGraph Store...

2017-12-01 11:26:51 1424 [main] [INFO ] org.apache.hugegraph.HugeGraph [] - Opening backend store: 'cassandra'

2017-12-01 11:26:52 2389 [main] [INFO ] org.apache.hugegraph.backend.store.cassandra.CassandraStore [] - Failed to connect keyspace: hugegraph, try init keyspace later

2017-12-01 11:26:52 2472 [main] [INFO ] org.apache.hugegraph.backend.store.cassandra.CassandraStore [] - Failed to connect keyspace: hugegraph, try init keyspace later

2017-12-01 11:26:52 2557 [main] [INFO ] org.apache.hugegraph.backend.store.cassandra.CassandraStore [] - Failed to connect keyspace: hugegraph, try init keyspace later

2017-12-01 11:26:53 2797 [main] [INFO ] org.apache.hugegraph.backend.store.cassandra.CassandraStore [] - Store initialized: huge_graph

2017-12-01 11:26:53 2945 [main] [INFO ] org.apache.hugegraph.backend.store.cassandra.CassandraStore [] - Store initialized: huge_schema

2017-12-01 11:26:53 3044 [main] [INFO ] org.apache.hugegraph.backend.store.cassandra.CassandraStore [] - Store initialized: huge_index

2017-12-01 11:26:53 3046 [pool-3-thread-1] [INFO ] org.apache.hugegraph.backend.Transaction [] - Clear cache on event 'store.init'

2017-12-01 11:26:59 9720 [main] [INFO ] org.apache.hugegraph.HugeGraph [] - Opening backend store: 'cassandra'

2017-12-01 11:27:00 9805 [main] [INFO ] org.apache.hugegraph.backend.store.cassandra.CassandraStore [] - Failed to connect keyspace: hugegraph1, try init keyspace later

2017-12-01 11:27:00 9886 [main] [INFO ] org.apache.hugegraph.backend.store.cassandra.CassandraStore [] - Failed to connect keyspace: hugegraph1, try init keyspace later

2017-12-01 11:27:00 9955 [main] [INFO ] org.apache.hugegraph.backend.store.cassandra.CassandraStore [] - Failed to connect keyspace: hugegraph1, try init keyspace later

2017-12-01 11:27:00 10175 [main] [INFO ] org.apache.hugegraph.backend.store.cassandra.CassandraStore [] - Store initialized: huge_graph

2017-12-01 11:27:00 10321 [main] [INFO ] org.apache.hugegraph.backend.store.cassandra.CassandraStore [] - Store initialized: huge_schema

2017-12-01 11:27:00 10413 [main] [INFO ] org.apache.hugegraph.backend.store.cassandra.CassandraStore [] - Store initialized: huge_index

2017-12-01 11:27:00 10413 [pool-3-thread-1] [INFO ] org.apache.hugegraph.backend.Transaction [] - Clear cache on event 'store.init'

Start server

bin/start-hugegraph.sh

Starting HugeGraphServer...

Connecting to HugeGraphServer (http://127.0.0.1:8080/graphs)....OK

⚠️ Deprecated: This backend has been removed starting from HugeGraph 1.7.0. If you need to use it, please refer to version 1.5.x documentation.

Users need to install ScyllaDB themselves; version 2.1 or above is recommended. See the download link.

Update hugegraph.properties

backend=scylladb

serializer=scylladb

# cassandra backend config

cassandra.host=localhost

cassandra.port=9042

cassandra.username=

cassandra.password=

#cassandra.connect_timeout=5

#cassandra.read_timeout=20

#cassandra.keyspace.strategy=SimpleStrategy

#cassandra.keyspace.replication=3

Since ScyllaDB is itself an “optimized version” based on Cassandra, users who do not have ScyllaDB installed can also use Cassandra directly as the backend storage: they only need to change the backend and serializer to scylladb and point the host and port at the seeds and port of the Cassandra cluster. This works, but it is not recommended, because it does not take advantage of ScyllaDB itself.

Initialize the database (required on the first startup, or when a new configuration has been manually added under ‘conf/graphs/’)

cd *hugegraph-${version}

bin/init-store.sh

Start server

bin/start-hugegraph.sh

Starting HugeGraphServer...

Connecting to HugeGraphServer (http://127.0.0.1:8080/graphs)....OK

Users need to install HBase themselves; version 2.0 or above is required. See the download link.

Update hugegraph.properties

backend=hbase

serializer=hbase

# hbase backend config

hbase.hosts=localhost

hbase.port=2181

# Note: recommend to modify the HBase partition number by the actual/env data amount & RS amount before init store

# it may influence the loading speed a lot

#hbase.enable_partition=true

#hbase.vertex_partitions=10

#hbase.edge_partitions=30

Initialize the database (required on the first startup, or when a new configuration has been manually added under ‘conf/graphs/’)

cd *hugegraph-${version}

bin/init-store.sh

Start server

bin/start-hugegraph.sh

Starting HugeGraphServer...

Connecting to HugeGraphServer (http://127.0.0.1:8080/graphs)....OK

For other backend configurations, please refer to the introduction to configuration options.

⚠️ Deprecated: This backend has been removed starting from HugeGraph 1.7.0. If you need to use it, please refer to version 1.5.x documentation.

Because MySQL is licensed under the GPL, which is incompatible with the Apache License, users must install MySQL themselves. See the download link.

Download the MySQL driver package, such as mysql-connector-java-8.0.30.jar, and place it in the lib directory of HugeGraph-Server.

Update hugegraph.properties to configure the database URL, username, and password.

store is the database name; it will be created automatically if it doesn’t exist.

backend=mysql

serializer=mysql

store=hugegraph

# mysql backend config

jdbc.driver=com.mysql.cj.jdbc.Driver

jdbc.url=jdbc:mysql://127.0.0.1:3306

jdbc.username=

jdbc.password=

jdbc.reconnect_max_times=3

jdbc.reconnect_interval=3

jdbc.ssl_mode=false

Initialize the database (required for first startup or when manually adding new configurations to conf/graphs/)

cd *hugegraph-${version}

bin/init-store.sh

Start server

bin/start-hugegraph.sh

Starting HugeGraphServer...

Connecting to HugeGraphServer (http://127.0.0.1:8080/graphs)....OK

Pass the -p true argument to the startup script to enable preload, which creates a sample graph.

bin/start-hugegraph.sh -p true

Starting HugeGraphServer in daemon mode...

Connecting to HugeGraphServer (http://127.0.0.1:8080/graphs)......OK

And use the RESTful API to request HugeGraphServer and get the following result:

> curl "http://localhost:8080/graphs/hugegraph/graph/vertices" | gunzip

{"vertices":[{"id":"2:lop","label":"software","type":"vertex","properties":{"name":"lop","lang":"java","price":328}},{"id":"1:josh","label":"person","type":"vertex","properties":{"name":"josh","age":32,"city":"Beijing"}},{"id":"1:marko","label":"person","type":"vertex","properties":{"name":"marko","age":29,"city":"Beijing"}},{"id":"1:peter","label":"person","type":"vertex","properties":{"name":"peter","age":35,"city":"Shanghai"}},{"id":"1:vadas","label":"person","type":"vertex","properties":{"name":"vadas","age":27,"city":"Hongkong"}},{"id":"2:ripple","label":"software","type":"vertex","properties":{"name":"ripple","lang":"java","price":199}}]}

This indicates the successful creation of the sample graph.

Section 3.1 Use Docker container introduced how to deploy hugegraph-server with Docker. The server can also preload an example graph when the corresponding parameter is set.

⚠️ Deprecated: Cassandra backend has been removed starting from HugeGraph 1.7.0. If you need to use it, please refer to version 1.5.x documentation.

When using Docker, we can use Cassandra as the backend storage. We highly recommend using docker-compose directly to manage both the server and Cassandra.

The sample docker-compose.yml can be obtained on GitHub, and you can start it with docker-compose up -d. (If using Cassandra 4.0 as the backend storage, it takes approximately two minutes to initialize. Please be patient.)

version: "3"
services:
  graph:
    image: hugegraph/hugegraph
    container_name: cas-server
    ports:
      - 8080:8080
    environment:
      hugegraph.backend: cassandra
      hugegraph.serializer: cassandra
      hugegraph.cassandra.host: cas-cassandra
      hugegraph.cassandra.port: 9042
    networks:
      - ca-network
    depends_on:
      - cassandra
    healthcheck:
      test: ["CMD", "bin/gremlin-console.sh", "--", "-e", "scripts/remote-connect.groovy"]
      interval: 10s
      timeout: 30s
      retries: 3
  cassandra:
    image: cassandra:4
    container_name: cas-cassandra
    ports:
      - 7000:7000
      - 9042:9042
    security_opt:
      - seccomp:unconfined
    networks:
      - ca-network
    healthcheck:
      test: ["CMD", "cqlsh", "--execute", "describe keyspaces;"]
      interval: 10s
      timeout: 30s
      retries: 5
networks:
  ca-network:
volumes:
  hugegraph-data:

In this YAML file, configuration parameters related to Cassandra need to be passed as environment variables in the format of hugegraph.<parameter_name>.

Specifically, the configuration file hugegraph.properties contains settings such as backend=xxx and cassandra.host=xxx. To pass these settings as environment variables, prepend hugegraph. to each name, giving hugegraph.backend and hugegraph.cassandra.host.
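The naming rule is mechanical, so it can be expressed in a few lines. The Python sketch below is only an illustration of the rule described here; `to_container_env` and `environment_lines` are hypothetical helpers, and the two-space YAML indentation is a choice of this sketch.

```python
from typing import Dict, List


def to_container_env(properties: Dict[str, object]) -> Dict[str, str]:
    """Prefix every hugegraph.properties key with 'hugegraph.'."""
    return {f"hugegraph.{key}": str(value) for key, value in properties.items()}


def environment_lines(properties: Dict[str, object], indent: int = 6) -> List[str]:
    """Render the variables as 'name: value' lines for a compose environment map."""
    pad = " " * indent
    return [f"{pad}{name}: {value}" for name, value in to_container_env(properties).items()]
```

Passing `{"backend": "cassandra", "cassandra.host": "cas-cassandra"}` yields the names `hugegraph.backend` and `hugegraph.cassandra.host`, matching the compose file above.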

For the rest of the configuration options, refer to 4 config.

Set the environment variable PRELOAD=true when starting Docker to load data during the execution of the startup script.

Use docker run

Use docker run -itd --name=server -p 8080:8080 -e PRELOAD=true hugegraph/hugegraph:1.7.0

Use docker-compose

Create docker-compose.yml as follows, setting the environment variable PRELOAD=true. example.groovy is a predefined script that preloads the sample data. If needed, we can mount a new example.groovy to change the preloaded data.

version: '3'
services:
  server:
    image: hugegraph/hugegraph:1.7.0
    container_name: server
    environment:
      - PRELOAD=true
      - PASSWORD=xxx
    ports:
      - 8080:8080

Use docker-compose up -d to start the container

And use the RESTful API to request HugeGraphServer and get the following result:

> curl "http://localhost:8080/graphs/hugegraph/graph/vertices" | gunzip

{"vertices":[{"id":"2:lop","label":"software","type":"vertex","properties":{"name":"lop","lang":"java","price":328}},{"id":"1:josh","label":"person","type":"vertex","properties":{"name":"josh","age":32,"city":"Beijing"}},{"id":"1:marko","label":"person","type":"vertex","properties":{"name":"marko","age":29,"city":"Beijing"}},{"id":"1:peter","label":"person","type":"vertex","properties":{"name":"peter","age":35,"city":"Shanghai"}},{"id":"1:vadas","label":"person","type":"vertex","properties":{"name":"vadas","age":27,"city":"Hongkong"}},{"id":"2:ripple","label":"software","type":"vertex","properties":{"name":"ripple","lang":"java","price":199}}]}

This indicates the successful creation of the sample graph.

Use jps to check the service process

jps

6475 HugeGraphServer

Use curl to request the RESTful API

echo `curl -o /dev/null -s -w %{http_code} "http://localhost:8080/graphs/hugegraph/graph/vertices"`

Return 200, which means the server starts normally.
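Right after startup the server may need a few seconds before it answers, so scripts often poll until the status code is 200. A minimal Python sketch of that loop follows. The helper names are hypothetical, and the probe is passed in as a function so that the retry logic can be exercised without a running server.

```python
import time
import urllib.error
import urllib.request
from typing import Callable


def http_status(url: str, timeout: float = 3.0) -> int:
    """Return the HTTP status code for `url`, or 0 if no response was received."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code  # the server answered, but with an error status
    except OSError:
        return 0  # connection refused, timeout, DNS failure, ...


def wait_until_ok(probe: Callable[[], int], attempts: int = 30, delay: float = 1.0) -> bool:
    """Call `probe` until it returns 200 or the attempts are exhausted."""
    for attempt in range(attempts):
        if probe() == 200:
            return True
        if attempt < attempts - 1:
            time.sleep(delay)
    return False
```

A typical call would be `wait_until_ok(lambda: http_status("http://localhost:8080/graphs/hugegraph/graph/vertices"))`.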

The RESTful API of HugeGraphServer includes various types of resources, typically including graph, schema, gremlin, traverser and task.

- graph contains vertices and edges
- schema contains vertexlabels, propertykeys, edgelabels, and indexlabels
- gremlin contains various Gremlin statements, such as g.V(), which can be executed synchronously or asynchronously
- traverser contains various advanced queries, including shortest paths, intersections, N-step reachable neighbors, etc.
- task contains query and delete operations for asynchronous tasks

For example, to get the vertices of the hugegraph graph:

curl http://localhost:8080/graphs/hugegraph/graph/vertices

explanation

Since the graph contains many vertices and edges, the server compresses the response for list-type requests such as getting all vertices or all edges. With curl you therefore get garbled characters, which you can pipe to gunzip for decompression. It is recommended to use the Chrome browser with the Restlet plugin to send HTTP requests for testing.

curl "http://localhost:8080/graphs/hugegraph/graph/vertices" | gunzip
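A client written in code has to do the same decompression that gunzip does on the command line. The Python sketch below is one way to handle both cases: it checks for the two-byte gzip magic number and decompresses only when it is present. `decode_json_body` is a hypothetical helper name.

```python
import gzip
import json

GZIP_MAGIC = b"\x1f\x8b"  # first two bytes of every gzip stream


def decode_json_body(raw: bytes):
    """Decode a response body that may or may not be gzip-compressed."""
    if raw[:2] == GZIP_MAGIC:
        raw = gzip.decompress(raw)
    return json.loads(raw.decode("utf-8"))
```

The function takes the raw bytes of the response, so it works with any HTTP client that exposes the undecoded body.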

The current default configuration of HugeGraphServer can only be accessed locally, and the configuration can be modified so that it can be accessed on other machines.

vim conf/rest-server.properties

restserver.url=http://0.0.0.0:8080

response body:

{
    "vertices": [
        {
            "id": "2lop",
            "label": "software",
            "type": "vertex",
            "properties": {
                "price": [
                    {
                        "id": "price",
                        "value": 328
                    }
                ],
                "name": [
                    {
                        "id": "name",
                        "value": "lop"
                    }
                ],
                "lang": [
                    {
                        "id": "lang",
                        "value": "java"
                    }
                ]
            }
        },
        {
            "id": "1josh",
            "label": "person",
            "type": "vertex",
            "properties": {
                "name": [
                    {
                        "id": "name",
                        "value": "josh"
                    }
                ],
                "age": [
                    {
                        "id": "age",
                        "value": 32
                    }
                ]
            }
        },
        ...
    ]
}
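In the response body above, each property is wrapped in a list of objects with id and value fields, whereas the earlier curl output shows plain values. A client that wants a simple name-to-value mapping can normalize both shapes. The following Python sketch does this; `flatten_properties` is a hypothetical helper written for this example.

```python
def flatten_properties(vertex: dict) -> dict:
    """Map each property name of a vertex to its plain value(s)."""
    flat = {}
    for name, entries in vertex.get("properties", {}).items():
        if isinstance(entries, list):
            # Unwrap {"id": ..., "value": ...} objects, keep other items as-is.
            values = [e["value"] if isinstance(e, dict) and "value" in e else e
                      for e in entries]
            flat[name] = values[0] if len(values) == 1 else values
        else:
            flat[name] = entries  # already a plain value
    return flat
```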

For the detailed API, please refer to RESTful-API

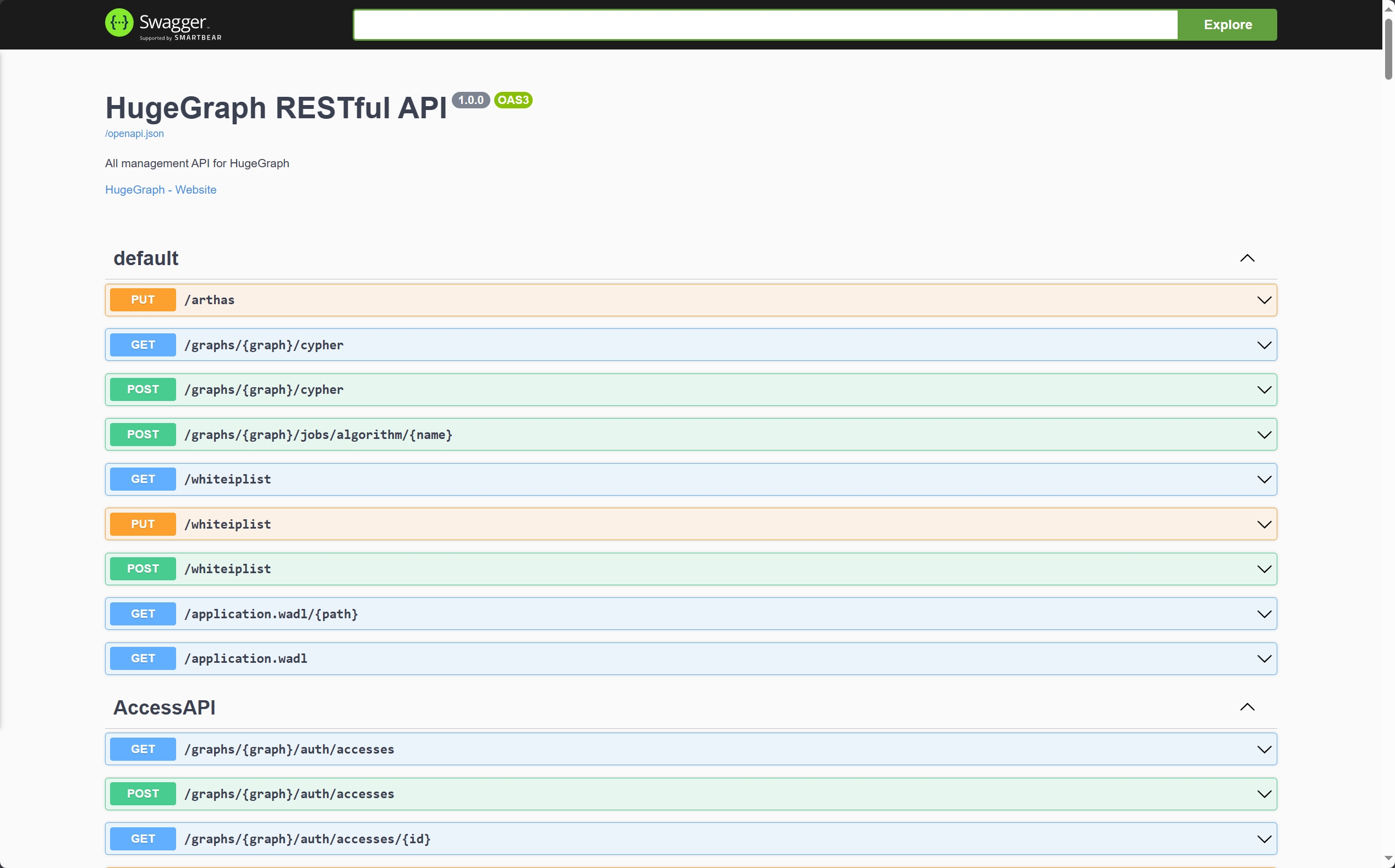

You can also visit localhost:8080/swagger-ui/index.html to check the API.

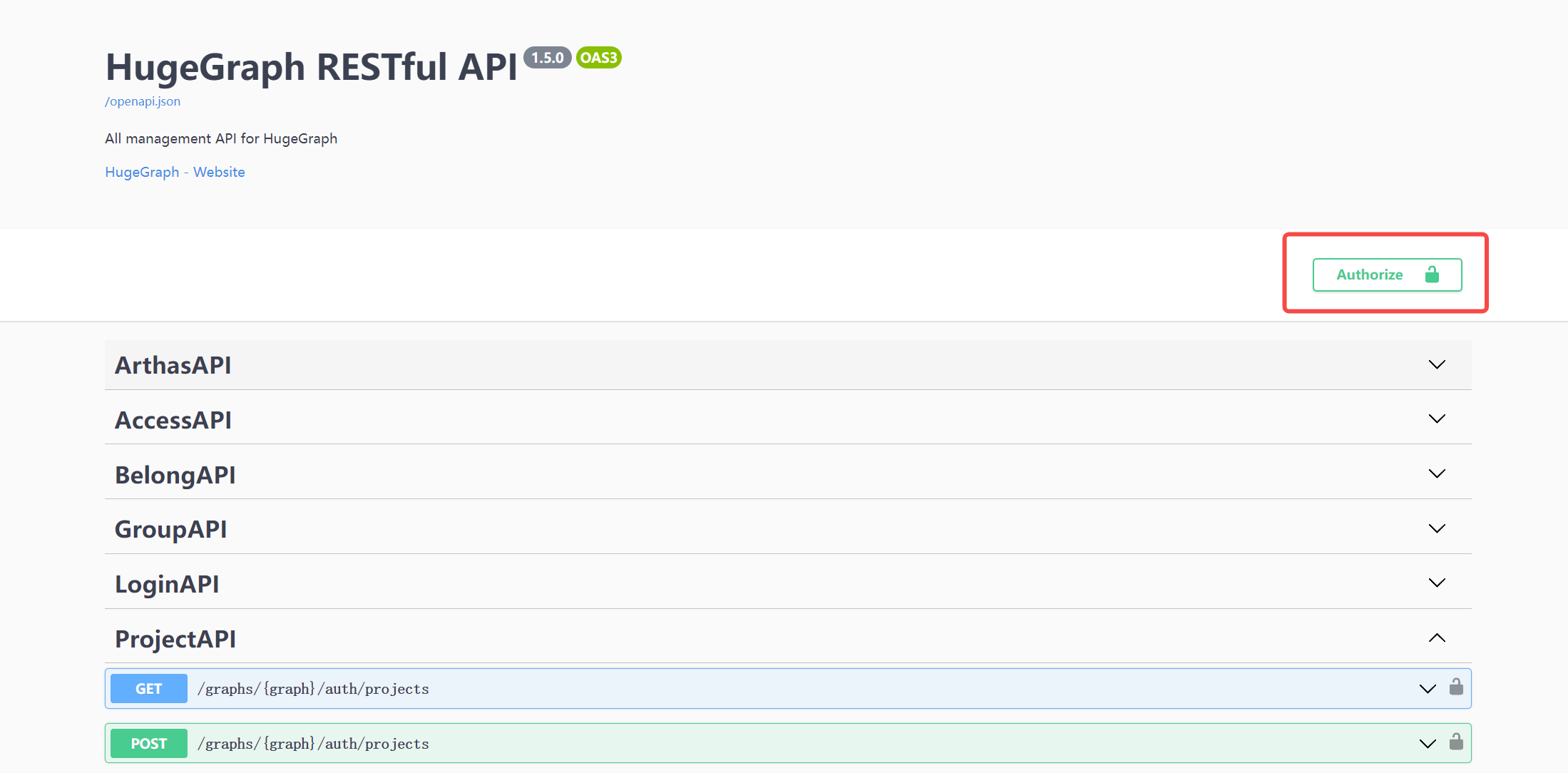

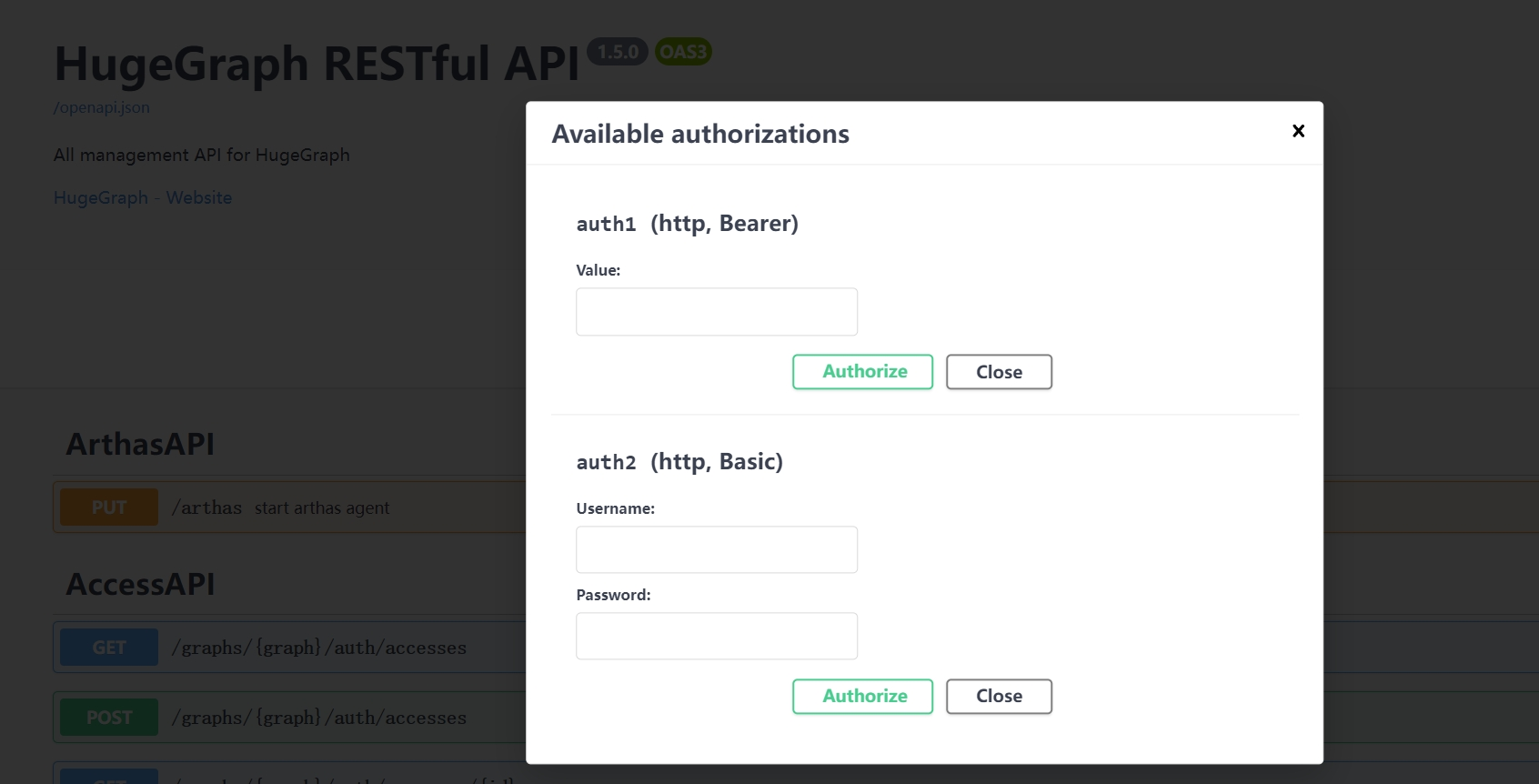

When using Swagger UI to debug the API provided by HugeGraph, if HugeGraph Server turns on authentication mode, you can enter authentication information on the Swagger page.

Currently, HugeGraph supports setting authentication information in two forms: Basic and Bearer.
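Outside Swagger UI, the same two forms are sent as an HTTP Authorization header. The Python sketch below builds both headers according to the standard Basic and Bearer schemes. The function names are hypothetical, and how a Bearer token is obtained from the server is not covered here.

```python
import base64
from typing import Dict


def basic_auth_header(username: str, password: str) -> Dict[str, str]:
    """Build a Basic Authorization header from a username and password."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return {"Authorization": f"Basic {token}"}


def bearer_auth_header(token: str) -> Dict[str, str]:
    """Build a Bearer Authorization header from an already issued token."""
    return {"Authorization": f"Bearer {token}"}
```

Either dictionary can be passed as request headers to an HTTP client, for example as the `headers` argument of `urllib.request.Request`.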

$ cd *hugegraph-${version}

$ bin/stop-hugegraph.sh

Please refer to Setup Server in IDEA

HugeGraph-PD (Placement Driver) is the metadata management component of HugeGraph’s distributed version, responsible for managing the distribution of graph data and coordinating storage nodes. It plays a central role in distributed HugeGraph, maintaining cluster status and coordinating HugeGraph-Store storage nodes.

There are two ways to deploy the HugeGraph-PD component:

Download the latest version of HugeGraph-PD from the Apache HugeGraph official download page:

# Replace {version} with the latest version number, e.g., 1.5.0

wget https://downloads.apache.org/hugegraph/{version}/apache-hugegraph-incubating-{version}.tar.gz

tar zxf apache-hugegraph-incubating-{version}.tar.gz

cd apache-hugegraph-incubating-{version}/apache-hugegraph-pd-incubating-{version}

# 1. Clone the source code

git clone https://github.com/apache/hugegraph.git

# 2. Build the project

cd hugegraph

mvn clean install -DskipTests=true

# 3. After successful compilation, the PD module build artifacts will be located at

# apache-hugegraph-incubating-{version}/apache-hugegraph-pd-incubating-{version}

# target/apache-hugegraph-incubating-{version}.tar.gz

The main configuration file for PD is conf/application.yml. Here are the key configuration items:

spring:
  application:
    name: hugegraph-pd

grpc:
  # gRPC port for cluster mode
  port: 8686
  host: 127.0.0.1

server:
  # REST service port
  port: 8620

pd:
  # Storage path
  data-path: ./pd_data
  # Auto-expansion check cycle (seconds)
  patrol-interval: 1800
  # Initial store list, stores in the list are automatically activated
  initial-store-count: 1
  # Store configuration information, format is IP:gRPC port
  initial-store-list: 127.0.0.1:8500

raft:
  # Cluster mode
  address: 127.0.0.1:8610
  # Raft addresses of all PD nodes in the cluster
  peers-list: 127.0.0.1:8610

store:
  # Store offline time (seconds). After this time, the store is considered permanently unavailable
  max-down-time: 172800
  # Whether to enable store monitoring data storage
  monitor_data_enabled: true
  # Monitoring data interval
  monitor_data_interval: 1 minute
  # Monitoring data retention time
  monitor_data_retention: 1 day
  initial-store-count: 1

partition:
  # Default number of replicas per partition
  default-shard-count: 1
  # Default maximum number of replicas per machine
  store-max-shard-count: 12

For multi-node deployment, you need to modify the port and address configurations for each node to ensure proper communication between nodes.

In the PD installation directory, execute:

./bin/start-hugegraph-pd.sh

After successful startup, you can see logs similar to the following in logs/hugegraph-pd-stdout.log:

2024-xx-xx xx:xx:xx [main] [INFO] o.a.h.p.b.HugePDServer - Started HugePDServer in x.xxx seconds (JVM running for x.xxx)

In the PD installation directory, execute:

./bin/stop-hugegraph-pd.sh

Confirm that the PD service is running properly:

curl http://localhost:8620/actuator/health

If it returns {"status":"UP"}, it indicates that the PD service has been successfully started.

Additionally, you can verify the status of the Store node by querying the PD API:

curl http://localhost:8620/v1/stores

If the Store is configured successfully, the response of the above interface should contain the status information of the current node. The status “Up” indicates that the node is running normally. Only the response of one node configuration is shown here. If all three nodes are configured successfully and are running, the storeId list in the response should contain three IDs, and the Up, numOfService, and numOfNormalService fields in stateCountMap should be 3.

{
    "message": "OK",
    "data": {
        "stores": [
            {
                "storeId": 8319292642220586694,
                "address": "127.0.0.1:8500",
                "raftAddress": "127.0.0.1:8510",
                "version": "",
                "state": "Up",
                "deployPath": "/Users/{your_user_name}/hugegraph/apache-hugegraph-incubating-1.5.0/apache-hugegraph-store-incubating-1.5.0/lib/hg-store-node-1.5.0.jar",
                "dataPath": "./storage",
                "startTimeStamp": 1754027127969,
                "registedTimeStamp": 1754027127969,
                "lastHeartBeat": 1754027909444,
                "capacity": 494384795648,
                "available": 346535829504,
                "partitionCount": 0,
                "graphSize": 0,
                "keyCount": 0,
                "leaderCount": 0,
                "serviceName": "127.0.0.1:8500-store",
                "serviceVersion": "",
                "serviceCreatedTimeStamp": 1754027127000,
                "partitions": []
            }
        ],
        "stateCountMap": {
            "Up": 1
        },
        "numOfService": 1,
        "numOfNormalService": 1
    },
    "status": 0
}
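The check described for this response (the number of stores, the Up count, numOfService, and numOfNormalService all equal the expected node count) can be written down directly. The Python sketch below does so; `all_stores_up` is a hypothetical helper, and it relies only on the field names visible in the response above.

```python
import json


def all_stores_up(body: str, expected: int) -> bool:
    """Return True if the stores response reports `expected` healthy stores."""
    data = json.loads(body).get("data", {})
    up_count = data.get("stateCountMap", {}).get("Up", 0)
    return (
        len(data.get("stores", [])) == expected
        and up_count == expected
        and data.get("numOfService") == expected
        and data.get("numOfNormalService") == expected
    )
```

For a three-node Store cluster, the body returned by the curl command would be passed with `expected=3`.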

HugeGraph-Store is the storage node component of HugeGraph’s distributed version, responsible for actually storing and managing graph data. It works in conjunction with HugeGraph-PD to form HugeGraph’s distributed storage engine, providing high availability and horizontal scalability.

There are two ways to deploy the HugeGraph-Store component:

Download the latest version of HugeGraph-Store from the Apache HugeGraph official download page:

# Replace {version} with the latest version number, e.g., 1.5.0

wget https://downloads.apache.org/hugegraph/{version}/apache-hugegraph-incubating-{version}.tar.gz

tar zxf apache-hugegraph-incubating-{version}.tar.gz

cd apache-hugegraph-incubating-{version}/apache-hugegraph-hstore-incubating-{version}

# 1. Clone the source code

git clone https://github.com/apache/hugegraph.git

# 2. Build the project

cd hugegraph

mvn clean install -DskipTests=true

# 3. After successful compilation, the Store module build artifacts will be located at

# apache-hugegraph-incubating-{version}/apache-hugegraph-hstore-incubating-{version}

# target/apache-hugegraph-incubating-{version}.tar.gz

The main configuration file for Store is conf/application.yml. Here are the key configuration items:

pdserver:
  # PD service address, multiple PD addresses are separated by commas (configure PD's gRPC port)
  address: 127.0.0.1:8686

grpc:
  # gRPC service address
  host: 127.0.0.1
  port: 8500
  netty-server:
    max-inbound-message-size: 1000MB

raft:
  # raft cache queue size
  disruptorBufferSize: 1024
  address: 127.0.0.1:8510
  max-log-file-size: 600000000000
  # Snapshot generation time interval, in seconds
  snapshotInterval: 1800

server:
  # REST service address
  port: 8520

app:
  # Storage path, supports multiple paths separated by commas
  data-path: ./storage
  #raft-path: ./storage

spring:
  application:
    name: store-node-grpc-server
  profiles:
    active: default
    include: pd

logging:
  config: 'file:./conf/log4j2.xml'
  level:
    root: info

For multi-node deployment, you need to modify the following configurations for each Store node:

- grpc.port (RPC port) for each node
- raft.address (Raft protocol port) for each node
- server.port (REST port) for each node
- app.data-path (data storage path) for each node

Ensure that the PD service is already started, then in the Store installation directory, execute:

./bin/start-hugegraph-store.sh

After successful startup, you can see logs similar to the following in logs/hugegraph-store-server.log:

2024-xx-xx xx:xx:xx [main] [INFO] o.a.h.s.n.StoreNodeApplication - Started StoreNodeApplication in x.xxx seconds (JVM running for x.xxx)

In the Store installation directory, execute:

./bin/stop-hugegraph-store.sh

Below is a configuration example for a three-node deployment:

For the three Store nodes, the main configuration differences are as follows:

Node A:

grpc:
  port: 8500
raft:
  address: 127.0.0.1:8510
server:
  port: 8520
app:
  data-path: ./storage-a

Node B:

grpc:
  port: 8501
raft:
  address: 127.0.0.1:8511
server:
  port: 8521
app:
  data-path: ./storage-b

Node C:

grpc:
  port: 8502
raft:
  address: 127.0.0.1:8512
server:
  port: 8522
app:
  data-path: ./storage-c

All nodes should point to the same PD cluster:

pdserver:
  address: 127.0.0.1:8686,127.0.0.1:8687,127.0.0.1:8688
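The three Store nodes differ only by a port offset and a data directory suffix. As an illustration, the Python sketch below derives those overrides from a node index. The function name is hypothetical, and the numbering scheme (base ports 8500, 8510, and 8520 plus the index) is taken from the example values in this section, not from any HugeGraph rule.

```python
from typing import Dict


def store_node_overrides(index: int, host: str = "127.0.0.1") -> Dict[str, object]:
    """Return the per-node settings for Store node `index` (0 = Node A)."""
    if not 0 <= index <= 9:
        # Beyond 9 the gRPC, Raft, and REST port ranges would overlap.
        raise ValueError("index must be between 0 and 9")
    suffix = "abcdefghij"[index]
    return {
        "grpc.port": 8500 + index,
        "raft.address": f"{host}:{8510 + index}",
        "server.port": 8520 + index,
        "app.data-path": f"./storage-{suffix}",
    }
```

Indexes 0, 1, and 2 reproduce the values listed for Node A, Node B, and Node C.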

Confirm that the Store service is running properly:

curl http://localhost:8520/actuator/health

If it returns {"status":"UP"}, it indicates that the Store service has been successfully started.

Additionally, you can check the status of Store nodes in the cluster through the PD API:

curl http://localhost:8620/pd/api/v1/stores